In the last post, “Automation Executes Tasks. Agentic AI Executes Outcomes,” we looked at why traditional automation has reached its limit in today’s go-to-market (GTM) strategies. Automation sped up work, but it wasn’t built to manage results across complex, full-funnel environments. Agentic AI changes this by focusing on achieving outcomes, not just completing tasks.

This shift raises an immediate challenge.

If AI is going to participate directly in GTM execution (making decisions, prioritizing actions, and acting across systems), how do organizations maintain control without slowing everything down?

For years, governance was treated as a tradeoff: it reduced risk, enforced compliance, and protected data, but often at the cost of speed. In many GTM teams, governance stepped in only after problems surfaced or operations grew too large to manage. Agentic AI is now forcing organizations to rethink that approach.

Governance is no longer holding back innovation. Instead, it’s becoming the tool that lets organizations move faster while keeping trust, visibility, and accountability.

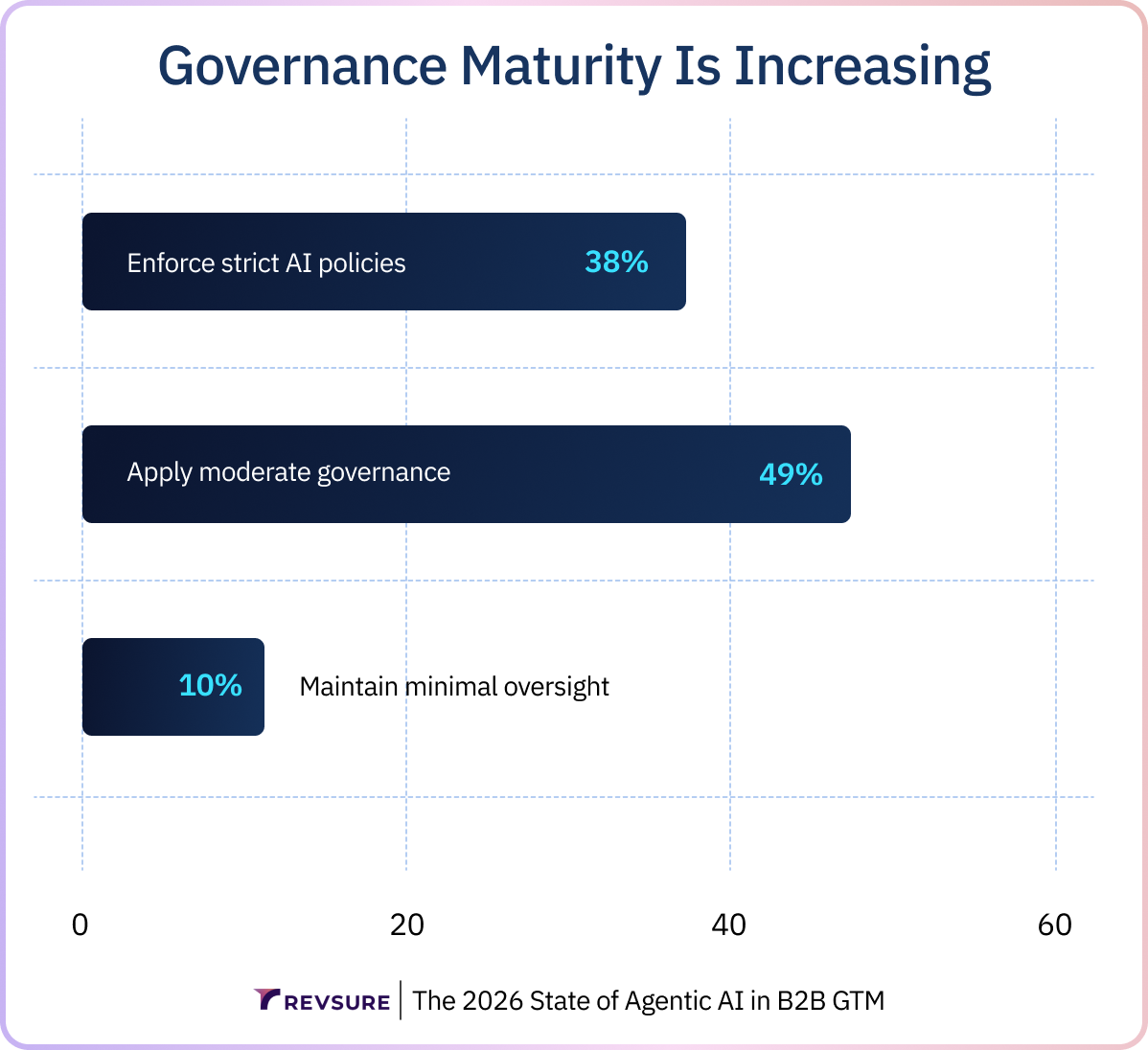

That shift already shows up in how organizations govern AI in practice. In RevSure’s 2026 State of Agentic AI in B2B GTM research, governance is maturing across GTM teams: 38% of organizations now enforce strict AI policies, and another 49% apply moderate governance frameworks. Only 10% report minimal oversight.

This is an important signal. Most companies are not entering the Agentic Era casually or without guardrails. They are actively putting in place the policy structures, review loops, and accountability models that make autonomous execution possible. Governance no longer sits outside GTM execution; it is becoming the foundation that allows AI to operate with trust and consistency.

Why Governance Became a Constraint and Why That’s Changing

Governance earned its reputation for friction honestly. Policies were often layered on top of already messy systems. Approval steps slowed work without reliably improving results. Oversight was reactive rather than built in, and teams often worked around governance instead of with it.

Yet according to RevSure’s 2026 State of Agentic AI in B2B GTM research, today’s hesitation about Agentic AI is not resistance to innovation. It is concern about a lack of transparency.

Leaders say their biggest barriers to scaling Agentic AI are security and privacy concerns (54%), questions about accuracy and reliability (47%), and data integration challenges (44%). In addition, 77% worry that, without alignment, Agentic AI could become another siloed part of the business.

These concerns do not signal a lack of appetite for autonomy; they signal a demand for clarity. The question is not whether AI should act, but whether it can act in ways that remain understandable, auditable, and governed. That distinction matters because it shifts governance from a defensive role to a supportive one.

Autonomy Scales Only When Structure Is Explicit

One of the most counterintuitive lessons of the Agentic Era is that autonomy does not reduce the need for structure. It increases it. Agentic AI systems reason, prioritize, and act based on context. That requires organizations to be explicit about what outcomes matter, which tradeoffs are acceptable, and where human oversight is required. Ambiguity that humans can work around becomes a risk when execution is delegated to autonomous systems.

That is why governance is increasingly seen as a prerequisite for scale rather than a brake on it. In the research, 97% of leaders say they are confident they can scale AI responsibly, which points to a change in thinking: more leaders now treat governance as the discipline that makes progress repeatable.

Well-designed governance reduces friction. Clear decision rights cut down on debate. Rules set ahead of time speed up execution. Defined boundaries let AI act without constant human intervention. In this model, governance does not limit autonomy; it makes autonomy safe.

What Governed Autonomy Looks Like in Practice

Governance matters most when it answers practical questions about how work gets done, not just abstract policy issues.

Who owns which decisions across the funnel?

Which actions can be taken autonomously, and which require human review?

What data is considered authoritative when systems disagree?

How are outcomes measured consistently across functions?

How do teams trace why an action was taken and what impact it had?

When these questions are answered, AI systems stop feeling like black boxes and become transparent participants in GTM execution.

This is also where RevOps (revenue operations) takes on an expanded role. As Agentic AI moves into execution, RevOps increasingly becomes the steward of governed autonomy, ensuring that data definitions, execution logic, and performance metrics stay aligned across marketing, sales, and customer teams.

Here, governance is more than a list of rules. It is the common language that helps both autonomous systems and people work toward the same definition of success.

Governance Without Integration Still Slows Execution

Governance alone cannot solve fragmentation; it must be paired with integration. One of the most telling signals in the research is the gap between stated readiness and strong confidence. While 95% of leaders believe their tech stack can support Agentic AI, only 64% express strong confidence in that belief.

This gap exists because governance does not work well across disconnected systems. If data definitions differ across tools, rules get applied unevenly. If execution logic is trapped in silos, oversight is always playing catch-up. If agents see only part of the picture, even good governance can lead to poor results.

Governed autonomy works only when systems are connected. To act responsibly, Agentic AI needs a clear view across CRM, marketing, sales, analytics, and customer systems. That is why integration is not just a technical detail. It is the foundation that lets governance scale and autonomy accelerate.

From Guardrails to Growth Levers

The most important shift GTM leaders need to make is not technical. It is conceptual. Governance should not exist merely to prevent mistakes. It should exist to enable outcomes.

Good governance reduces ambiguity rather than adding to it. It removes the need for endless approvals by setting expectations early. It helps teams learn by making actions easy to trace and measure. In well-run GTM organizations, governance becomes a tool for growth: it brings consistency, lets AI act confidently, and turns autonomy into an advantage instead of a risk.

This is how Agentic AI moves from pilot projects into the core operating fabric of GTM execution.

The Question Leaders Need to Ask Now

As Agentic AI becomes part of everyday GTM work, leaders face a pivotal choice. Is governance there only to apply the brakes when risks appear, or is it designed to help teams move faster and with more confidence when results matter?

The organizations that succeed in the Agentic Era won’t be those with the fewest rules or the tightest controls. They’ll be the ones with the clearest ways of working. Governance will not disappear; it will become so embedded in how work gets done that it is barely noticed.

—

Agentic AI is moving GTM teams from experimentation to autonomous execution. The organizations that grow fastest will treat governance not as a limit, but as the framework that lets AI act with confidence and control. To learn more about how GTM leaders are handling governance, autonomy, and readiness, download the 2026 State of Agentic AI in B2B GTM report.
